IBM’s report on the performance of VMs and Linux containers, dated July 21, 2014, primarily demonstrates the relative performance advantages of Linux containers (such as Docker). It also contains a great deal of current performance information on VMs that is extremely relevant, especially if you are running a CPU- or I/O-intensive workload such as ATG Oracle Commerce. I would like to share some of the highlights of the report, especially the sections most relevant to anyone considering running ATG in a virtualized environment.

The report goes into detail on a number of performance issues with virtualization and also calls out the security concerns around multi-tenant virtualization solutions. In this article I would like to discuss the performance drawbacks mentioned in the report.

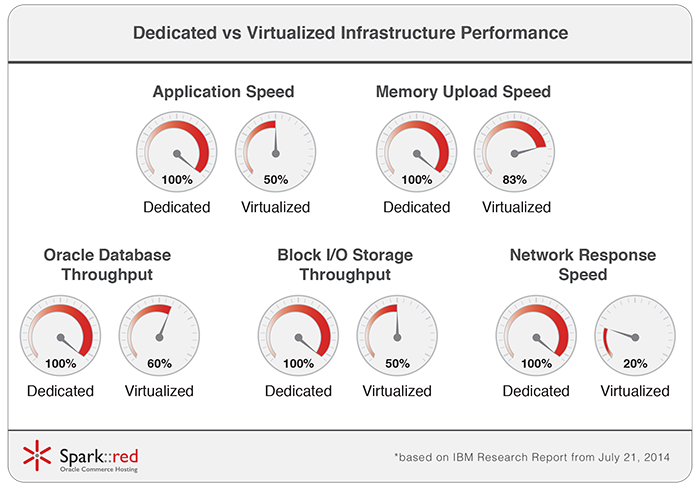

Application Speed

Section 3.1 of the report covers Linpack, a CPU intensive, memory read/write heavy, multithreaded performance benchmarking tool. This benchmark is an excellent one for our purposes and the type of workload is very similar to your typical production ATG Oracle Commerce application (although missing the high I/O traffic of an ATG Oracle Commerce cluster). The performance overheads of abstracting basic hardware resources such as memory and CPU cores are clear here, with the KVM virtualized benchmark running roughly twice as slowly as native on the same hardware. That’s a 50% performance penalty, and would require twice the physical (and likely logical) infrastructure footprint for the production cluster to sustain the same performance as native with any given traffic watermark.

- 50% speed reduction
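The capacity arithmetic behind that claim can be sketched in a few lines of Python. This is only a rough sizing illustration, not something taken from the report: the function name `servers_needed` and the 10-server cluster are hypothetical, and the model assumes capacity scales linearly with server count, which real clusters only approximate.

```python
import math


def servers_needed(native_servers: int, penalty: float) -> int:
    """Servers required to match native capacity when each virtualized
    server delivers only (1 - penalty) of native throughput.

    Assumes linear scaling with server count (a simplification).
    """
    if not 0 <= penalty < 1:
        raise ValueError("penalty must be in the range [0, 1)")
    return math.ceil(native_servers / (1 - penalty))


# Hypothetical 10-server native cluster with the 50% Linpack penalty.
print(servers_needed(10, 0.50))  # 20
```

Under these assumptions a 50% penalty doubles the footprint, which is where the "twice the infrastructure" figure comes from. Smaller penalties still round up to whole servers.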

Memory Upload Speed

While the STREAM memory bandwidth test in section 3.2 shows that virtualization has a very minor impact on performance, the test isn’t as relevant for eCommerce type workloads as section 3.3, since it’s using linear memory access, allowing for prefetching to solve the memory access latency issues that virtualization introduces. The Random Access memory test in section 3.3 is more relevant, as it takes latency as well as memory bandwidth into consideration.

In the illustrations for this section you can see that on single-socket systems memory virtualization introduces a 17% performance penalty on memory access, because it adds an additional page table walk. Multi-socket systems suffer a similar issue even without virtualization, so the impact there is negligible, although performance is markedly worse than on single-socket systems.

- 17% performance penalty on memory access

Network Latency

We see a similar scenario in sections 3.4 and 3.5 related to networking. Bandwidth impacts are minimal, although the testers are using virtio to minimize overhead, which may or may not be applicable to your available virtualization setup. However, latency impacts are significant, due to the additional layers added by the virtualized network interface. Latency for single packet transactions was 80% worse when virtualized. This is a massive difference.

Section 3.7 talks about Redis, a high performance NoSQL data store. At Spark::red we have Redis deployed by several clients, and Oracle Coherence should be a similar application profile, so it’s an especially relevant benchmark for us. The network latency discussed earlier in section 3.5 rears its ugly head here, giving the VM based Redis server substantially worse throughput.

- 80% latency increase for single packet transactions
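To see why added latency translates into lost throughput for a request/response service such as Redis, consider a simplified model (an assumption for illustration, not a result from the report): a single synchronous client that waits for each reply before sending the next request, so its throughput is inversely proportional to round-trip latency. The function name `relative_throughput` is hypothetical.

```python
def relative_throughput(latency_increase: float) -> float:
    """Throughput relative to native for one synchronous client whose
    request rate is limited purely by round-trip latency.

    latency_increase is fractional, e.g. 0.80 for 80% higher latency.
    """
    if latency_increase < 0:
        raise ValueError("latency_increase must be non-negative")
    return 1.0 / (1.0 + latency_increase)


# 80% higher latency leaves a serial client at about 56% of native throughput.
print(f"{relative_throughput(0.80):.1%}")  # 55.6%
```

Real deployments use many concurrent connections and pipelining, which hide some of this cost, so treat the figure as an intuition aid for a latency-bound client rather than a prediction.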

Block I/O Throughput

Section 3.6 covers the performance of block storage I/O, which is used for things like the SAN backing your Oracle Database cluster, a critical performance area for an ATG cluster. Again, while sequential reads and writes allow prefetching and caching to cover up the overhead of virtualization, a more realistic test involving random access reads, writes, and mixed I/O operations demonstrates a 50% performance penalty. Worse than that, due to the virtualization layer, CPU load is much higher on the KVM test, further reducing the available power for your applications. You can also see that not only is the latency twice as bad when virtualized under low loads, the latency penalty actually becomes much worse under higher loads, getting to 3x-4x worse than native.

- 50% performance decrease

Oracle Database Throughput

Section 3.8 covers MySQL performance. While the Oracle Database is obviously different than MySQL, they are similar products, with similar workloads, and similar resource utilization profiles. Therefore, when you see that KVM’s overhead is 40% or worse throughout the tests due to network and CPU overhead, you can imagine that you don’t want to run Oracle Database virtualized either.

- 40% or worse KVM overhead

As the summary in Section 5 notes, “KVM is less suitable for workloads that are latency-sensitive or have high I/O rates. These overheads significantly impact the server applications we tested.” Oracle ATG Commerce applications, or really any eCommerce applications, are absolutely latency sensitive and high I/O. This backs up all of the data we’ve seen first hand as well as anecdotally. While there are some companies running their eCommerce platform on VMs, the performance impact, and the additional hardware costs required to offset that impact, are a known downside. For most companies the upsides are either too few, or too difficult to practically take advantage of, for virtualization to be a viable solution, at least at this point in time.

At Spark::red, far from being anti-VM or slow to embrace Cloud, we see great value in virtualization for some application types and workloads. And while we wish ATG was on this list, as it would make our lives easier in several ways, the reality is that ATG is a poor fit for virtualization at this time. The performance, security, and cost overheads, when added to the complexities around adding or removing ATG servers from a cluster, mean that for most companies our best practice is still to utilize dedicated hardware.